ggml.ai

About ggml.ai

ggml.ai is a tensor library for machine learning, aimed at developers who want to run models on their own hardware. It enables efficient on-device inference and supports large models with high performance on commodity hardware. The project emphasizes simplicity and open development, and it welcomes outside contributors.

The core library is available under the MIT license. Additional extensions may be commercialized in the future, but the foundational features are free to use. Community members can take part through collaborative development and potential sponsorships.

ggml.ai presents its features in a simple layout that is easy to navigate. The design favors simplicity while highlighting what the library can do, which keeps it accessible to developers and learners alike.

How ggml.ai works

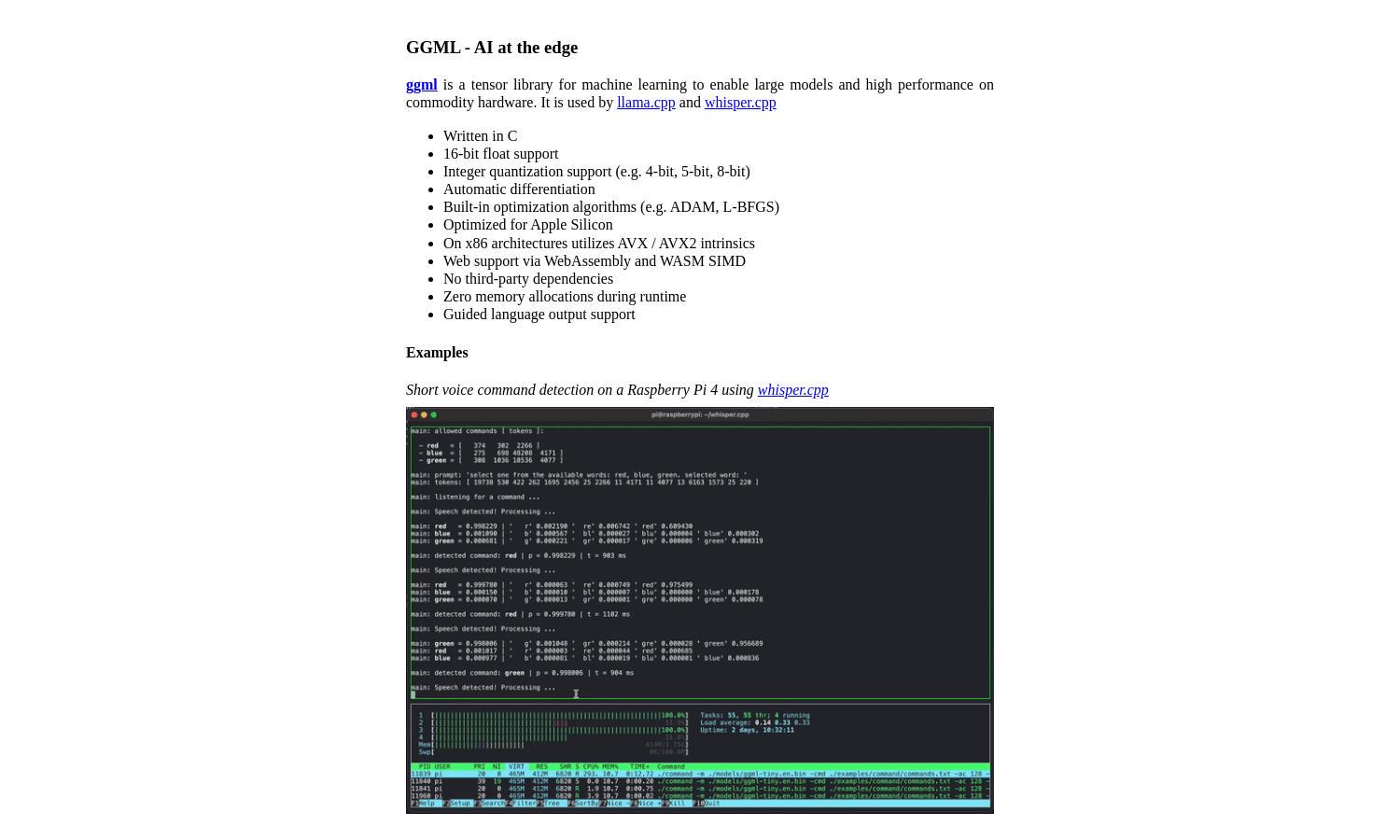

Developers use ggml.ai by working directly with the open-source tensor library, which supports efficient model inference. The feature set is deliberately minimal and includes automatic differentiation and optimization algorithms for machine learning. The straightforward architecture makes experimentation easy, and contributors are encouraged to share ideas and solutions.

Key Features for ggml.ai

Integer Quantization

Integer quantization lets ggml.ai run models efficiently on constrained hardware. Storing a model's values as low-precision integers instead of floating-point numbers reduces memory usage and speeds up inference, which makes ggml.ai well suited to mobile devices and edge computing applications.
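To make the idea concrete, here is a minimal sketch of symmetric integer quantization in plain Python. It is an illustration of the general technique, not ggml.ai's actual implementation or storage format. The function names `quantize_block` and `dequantize_block`, the 8-bit width, and the sample weights are all assumptions chosen for this example.

```python
def quantize_block(values, bits=8):
    """Symmetric quantization of one block of floats to signed integers.

    Hypothetical helper for illustration; returns (scale, integers).
    """
    qmax = 2 ** (bits - 1) - 1              # 127 for 8-bit integers
    amax = max(abs(v) for v in values)      # largest magnitude in the block
    scale = amax / qmax if amax > 0 else 1.0
    # Divide by the scale, round to the nearest integer, and clamp to range.
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return scale, q


def dequantize_block(scale, q):
    """Recover approximate floats from the integers and the shared scale."""
    return [scale * x for x in q]


# Example weights (made up for this sketch).
weights = [0.12, -0.5, 0.33, 1.0, -0.25]
scale, q = quantize_block(weights)
restored = dequantize_block(scale, q)

# Each restored value differs from the original by at most half a step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("scale:", scale)
print("quantized:", q)
print("max error:", max_error)
```

Each block stores one floating-point scale plus one small integer per value, which is where the memory saving comes from. The cost is a rounding error of at most half the scale per value.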

Cross-Platform Compatibility

ggml.ai runs on Mac, Windows, Linux, iOS, and Android. Developers can deploy the same machine learning models across desktop and mobile devices, which broadens the reach of their applications.

Automatic Differentiation

Automatic differentiation in ggml.ai computes gradients for machine learning models without hand-written derivative code. These gradients feed optimization methods such as ADAM and L-BFGS, which speeds up model training and development.
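The sketch below shows how reverse-mode automatic differentiation can work on scalars, written in plain Python. It is a toy illustration of the technique, not ggml.ai's API. The `Value` class and its methods are hypothetical names introduced for this example, and only addition and multiplication are supported.

```python
class Value:
    """A scalar node in a computation graph that records how it was produced."""

    def __init__(self, data, parents=(), grad_fns=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents      # nodes this value was computed from
        self._grad_fns = grad_fns    # maps output gradient to each parent's share

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        # d(a + b)/da = 1 and d(a + b)/db = 1
        return Value(self.data + other.data, (self, other),
                     (lambda g: g, lambda g: g))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        # d(a * b)/da = b and d(a * b)/db = a
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def backward(self):
        """Propagate gradients from this node back to every input."""
        order, seen = [], set()

        def visit(node):
            # Post-order walk: parents are appended before the node itself.
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        # Reversed order guarantees a node's gradient is complete
        # before it is passed on to its parents.
        for node in reversed(order):
            for parent, fn in zip(node._parents, node._grad_fns):
                parent.grad += fn(node.grad)


# f(x, y) = x * y + x, so df/dx = y + 1 and df/dy = x.
x, y = Value(3.0), Value(4.0)
f = x * y + x
f.backward()
print(f.data, x.grad, y.grad)
```

An optimizer then consumes the gradients. The simplest update is `x.data -= learning_rate * x.grad`, while methods such as ADAM and L-BFGS use the same gradients with more elaborate update rules.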
