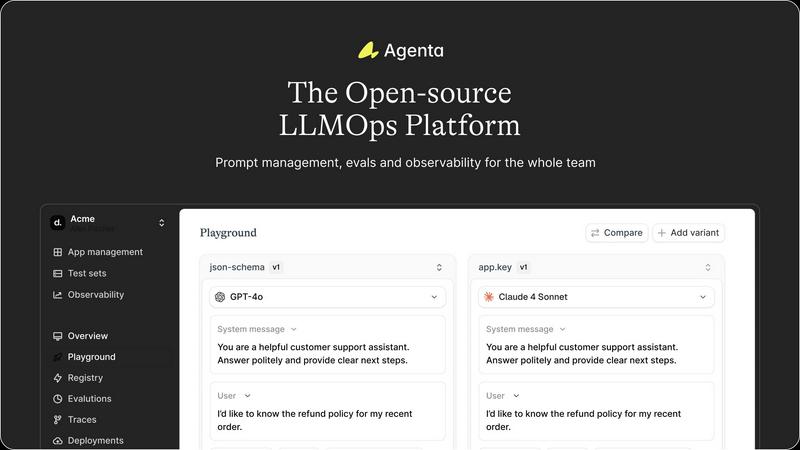

Agenta

Agenta is an open-source LLMOps platform for building, evaluating, and debugging reliable LLM applications.

About Agenta

Agenta is an open-source LLMOps platform that gives AI development teams the infrastructure to build, evaluate, and ship reliable Large Language Model (LLM) applications. It targets two problems common in LLM development, unpredictable model behavior and fragmented workflows, by bringing the entire lifecycle into a single collaborative platform. Agenta is built for cross-functional teams of developers, product managers, and subject matter experts who want to replace ad-hoc prompt management and "vibe testing" with a structured, evidence-based process. It combines three pillars of LLMOps: a unified experimentation playground, systematic automated evaluation, and production observability. Because prompts, tests, and traces live in one place, teams can version their experiments, validate changes with automated and human-in-the-loop evaluations, and debug issues against real production data, which shortens the path from prototype to a production-ready AI agent.

Features of Agenta

Unified Experimentation Playground

The platform provides a centralized, model-agnostic interface where teams can experiment with different prompts, parameters, and foundation models and compare them side by side. Because it supports models from any provider, teams can iterate quickly without vendor lock-in. It also keeps a complete version history of every prompt and configuration, so developers can track changes, revert when needed, and see how their application's logic has evolved. The playground is connected directly to production data: any error or trace from a live system can be saved as a test case for further experimentation.
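To make the idea of prompt version history concrete, here is a minimal sketch of an append-only prompt registry. It is not Agenta's SDK or storage model; `PromptRegistry`, `PromptVersion`, `commit`, and `revert` are hypothetical names, and `example-model` is a placeholder. The property it illustrates is that every change, including a rollback, is recorded as a new version rather than an overwrite.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass(frozen=True)
class PromptVersion:
    """One immutable snapshot of a prompt template and its model parameters."""
    version: int
    template: str
    params: dict[str, Any]


@dataclass
class PromptRegistry:
    """Hypothetical append-only history of prompt configurations (not Agenta's API)."""
    history: list[PromptVersion] = field(default_factory=list)

    def commit(self, template: str, **params: Any) -> PromptVersion:
        # Every change creates a new version; earlier versions are never mutated.
        snapshot = PromptVersion(len(self.history) + 1, template, dict(params))
        self.history.append(snapshot)
        return snapshot

    def latest(self) -> PromptVersion:
        return self.history[-1]

    def revert(self, version: int) -> PromptVersion:
        # A rollback re-commits an old snapshot, so the revert itself is recorded.
        old = self.history[version - 1]
        return self.commit(old.template, **old.params)


registry = PromptRegistry()
registry.commit("Summarize: {text}", model="example-model", temperature=0.2)
registry.commit("Summarize in one sentence: {text}", model="example-model", temperature=0.0)
registry.revert(1)
print(registry.latest().version, registry.latest().template)  # 3 Summarize: {text}
```

Recording reverts as new versions keeps the full audit trail intact, which is what makes it possible to see how an application's prompt logic evolved over time.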

Automated and Integrated Evaluation Framework

Agenta replaces guesswork with a systematic evaluation process. Teams can configure and run automated evaluations using LLM-as-a-judge setups, built-in metrics (e.g., for correctness or relevance), or custom code evaluators. For complex, multi-step agentic workflows, the platform can assess each intermediate step in a trace, not just the final output, which is critical for locating reasoning errors. Human evaluation is part of the same workflow, so domain experts and product managers can give feedback directly alongside automated results.
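The sketch below illustrates custom code evaluators applied to each step of a multi-step trace rather than only to the final answer. The trace format (a list of dicts with `name` and `output` keys), the step names, and the evaluator functions are all hypothetical and do not reflect Agenta's evaluator interface.

```python
from typing import Callable

# A hypothetical agent trace: each step records its name and output.
Step = dict[str, object]
Evaluator = Callable[[Step, dict], float]


def retrieval_not_empty(step: Step, case: dict) -> float:
    """Score 1.0 if the retrieval step returned at least one document."""
    return 1.0 if step["output"] else 0.0


def answer_contains_expected(step: Step, case: dict) -> float:
    """Case-insensitive check that the answer contains the expected text."""
    return 1.0 if case["expected"].lower() in str(step["output"]).lower() else 0.0


# Map each step name to the evaluator that should score it.
STEP_EVALUATORS: dict[str, Evaluator] = {
    "retrieve": retrieval_not_empty,
    "answer": answer_contains_expected,
}


def evaluate_trace(trace: list[Step], case: dict) -> dict[str, float]:
    """Score every intermediate step that has an evaluator, not just the final output."""
    return {
        step["name"]: STEP_EVALUATORS[step["name"]](step, case)
        for step in trace
        if step["name"] in STEP_EVALUATORS
    }


trace = [
    {"name": "retrieve", "output": []},
    {"name": "answer", "output": "Paris is the capital of France."},
]
print(evaluate_trace(trace, {"expected": "paris"}))
# {'retrieve': 0.0, 'answer': 1.0}
```

Here the final answer passes while the retrieval step scores 0.0, the kind of hidden failure that scoring only the final output would miss.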

Production Observability and Trace Analysis

Each request to an instrumented LLM application is captured as a detailed trace in Agenta. This observability layer gives full visibility into the execution chain, so teams can pinpoint the exact point of failure in complex pipelines. The team can annotate traces collaboratively, and traces can also collect direct user feedback. A key capability is one-click conversion of any production trace into a persistent test case, which closes the loop between observation, debugging, and validation. The platform also supports live monitoring with online evaluations to detect performance regressions in real time.
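As a rough illustration of what "converting a trace into a test case" involves, the sketch below models a trace as a tree of spans and freezes it into a test-case record. The `Span` class, `trace_to_test_case`, and the record's field names are hypothetical and are not Agenta's trace or test-set schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Span:
    """One step in a hypothetical trace: a prompt, tool call, or model response."""
    name: str
    inputs: dict
    output: str
    children: list["Span"] = field(default_factory=list)


def trace_to_test_case(root: Span, expected_output: str) -> dict:
    """Freeze a production trace into a test case: root inputs plus a corrected expectation."""
    return {
        "inputs": dict(root.inputs),
        "expected_output": expected_output,
        # Keep the observed output and the full span tree for later comparison.
        "observed_output": root.output,
        "source_trace": asdict(root),
    }


root = Span(
    name="support_bot",
    inputs={"question": "How do I reset my password?"},
    output="Please contact sales.",
    children=[Span("retrieve_docs", {"query": "reset password"}, "")],
)
case = trace_to_test_case(root, expected_output="Use the Forgot password link.")
print(json.dumps(case["inputs"]))  # {"question": "How do I reset my password?"}
```

Keeping the original span tree alongside the new expectation means the test case can later be replayed and compared step by step, not only on the final output.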

Collaborative Workflow for Cross-Functional Teams

Agenta breaks down silos by providing tools accessible to both technical and non-technical roles. It offers a secure UI for domain experts to edit and experiment with prompts without writing code. Product managers and experts can independently run evaluations and compare experiment results through the graphical interface. The platform ensures parity between its full-featured API and UI, allowing programmatic workflows from developers and manual workflows from other stakeholders to integrate into one centralized hub, fostering collaboration across the entire AI product team.

Use Cases of Agenta

Rapid Prototyping and Iteration of LLM Applications

Development teams use Agenta's unified playground to quickly prototype new LLM features. They can test multiple prompt variations, model providers (OpenAI, Anthropic, open-source models), and parameters concurrently. The integrated version history allows them to methodically track which changes led to improvements, creating a reproducible experimentation process that accelerates the initial development cycle and reduces time from idea to a working prototype.
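A minimal sketch of comparing a grid of variants on shared inputs follows. It is not how Agenta's playground works internally: `fake_model` is an offline stand-in for a real provider call (it ignores the model name and temperature), and the template strings, model names, and 12-word metric are placeholders chosen only to make the comparison visible.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

# Hypothetical variant axes; real experiments would use actual prompts and model names.
TEMPLATES = ["Summarize: {text}", "Summarize in under 10 words: {text}"]
MODELS = ["model-a", "model-b"]
TEMPERATURES = [0.0, 0.7]

Variant = tuple[str, str, float]


def fake_model(prompt: str, model: str, temperature: float) -> str:
    """Offline stand-in for a provider call; it ignores model and temperature."""
    limit = 10 if "under 10 words" in prompt else 25
    return " ".join(prompt.split()[:limit])


def run_variant(variant: Variant, inputs: list[str]) -> tuple[Variant, float]:
    """Run one (template, model, temperature) variant over the shared inputs."""
    template, model, temperature = variant
    outputs = [fake_model(template.format(text=t), model, temperature) for t in inputs]
    # Example metric: fraction of outputs that fit a 12-word budget.
    score = sum(len(o.split()) <= 12 for o in outputs) / len(outputs)
    return variant, score


inputs = ["Agenta keeps prompts tests and traces together " * 3]
variants = list(product(TEMPLATES, MODELS, TEMPERATURES))

# Evaluate every variant on the same inputs concurrently.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(lambda v: run_variant(v, inputs), variants))

for (template, model, temp), score in sorted(results, key=lambda r: -r[1]):
    print(f"{score:.2f}  {model}  T={temp}  {template!r}")
```

Running every variant against the same inputs is what makes scores comparable; changing the inputs between variants would confound the comparison.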

Systematic Performance Validation Before Deployment

Before shipping updates to production, engineering and product teams use Agenta's evaluation framework to validate changes. They can run automated test suites against curated datasets, use LLM judges to assess output quality, and gather feedback from domain experts through human evaluation loops. This process confirms that new prompts or model configurations actually improve performance and do not introduce regressions, replacing "yolo deployments" with evidence-based releases.
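One simple way to turn "do not introduce regressions" into a release check is sketched below. This is a generic gating heuristic, not Agenta's built-in logic; `regression_gate` and its `tolerance` parameter are hypothetical, and the scores stand for per-case results from running a baseline and a candidate configuration on the same test set.

```python
from statistics import mean


def regression_gate(
    baseline: list[float], candidate: list[float], tolerance: float = 0.0
) -> tuple[bool, list[int]]:
    """Compare per-case scores from two configurations run on the same test set.

    Returns (ship, regressed_cases): ship is True only if the candidate's mean
    score is at least the baseline's and no single case drops by more than
    `tolerance`.
    """
    if len(baseline) != len(candidate):
        raise ValueError("both runs must score the same test cases")
    regressed = [i for i, (b, c) in enumerate(zip(baseline, candidate)) if c < b - tolerance]
    ship = mean(candidate) >= mean(baseline) and not regressed
    return ship, regressed


baseline_scores = [1.0, 0.0, 1.0, 1.0]
candidate_scores = [1.0, 1.0, 1.0, 0.0]
print(regression_gate(baseline_scores, candidate_scores))  # (False, [3])
```

Both runs average 0.75, yet case 3 regressed, so the gate blocks the release. Checking per-case scores rather than only the mean is one way to catch regressions that an aggregate metric hides.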

Production Debugging and Root Cause Analysis

When an LLM application shows unexpected behavior or errors in production, engineers use Agenta's observability tools. They examine detailed request traces to isolate the failing component, whether it is a specific prompt, a tool call, or a model response. Saving a problematic trace as a test case lets them reproduce and fix the issue in the playground, creating a feedback loop that turns production incidents into actionable improvements.
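Isolating the failing component in a trace can be thought of as a tree search. The sketch below assumes a hypothetical span tree in which an error in a child also gets recorded on its parent, and looks for the deepest failing span. `Span` and `root_cause` are illustrative names, not part of Agenta.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    """Hypothetical span: a named step with an optional error and child steps."""
    name: str
    error: Optional[str] = None
    children: list["Span"] = field(default_factory=list)


def root_cause(
    span: Span, path: tuple[str, ...] = ()
) -> Optional[tuple[tuple[str, ...], str]]:
    """Return (path from root, error) for the deepest failing span, or None.

    Children are searched before the span itself, so an error that merely
    propagated up to a parent is attributed to the child that raised it.
    """
    here = path + (span.name,)
    for child in span.children:
        found = root_cause(child, here)
        if found is not None:
            return found
    if span.error is not None:
        return here, span.error
    return None


trace = Span("agent", error="ToolError propagated", children=[
    Span("plan"),
    Span("call_weather_tool", error="ToolError: missing city argument"),
    Span("compose_answer"),
])
print(root_cause(trace))
# (('agent', 'call_weather_tool'), 'ToolError: missing city argument')
```

Searching children first (post-order) is the design choice that matters here: a pre-order search would stop at the top-level `agent` span and report the propagated error instead of its source.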

Collaborative Prompt Management with Domain Experts

In applications that require specialized knowledge (e.g., legal, medical, customer support), Agenta supports collaboration with domain experts. Subject matter experts can use a safe UI to refine prompts based on their expertise without relying on developers for every minor change. This lets experts shape the AI's behavior directly, while developers keep oversight through version control and integration pipelines to ensure quality and consistency.

Frequently Asked Questions

Is Agenta an open-source platform?

Yes, Agenta is fully open-source. The complete source code is available on GitHub, allowing users to inspect, modify, and deploy the platform on their own infrastructure. This model provides transparency, avoids vendor lock-in, and enables customization to fit specific organizational needs. The community is actively encouraged to contribute to the codebase, report issues, and suggest new features.

How does Agenta integrate with existing LLM frameworks and models?

Agenta is designed to be framework-agnostic and model-agnostic. It offers seamless integration with popular development frameworks like LangChain and LlamaIndex through its SDK. Furthermore, it supports any LLM provider (OpenAI, Anthropic, Azure OpenAI, Cohere, open-source models via Ollama, etc.) via its unified API, allowing teams to freely compare and switch between models without rewriting their application logic.
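The general pattern behind "switch models without rewriting application logic" is to code against a small interface and select the implementation from configuration. The sketch below shows that pattern with `typing.Protocol`. `ChatModel`, the stub adapters, and `PROVIDERS` are hypothetical; the stubs do not call any real provider SDK and do not represent Agenta's unified API.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical minimal interface that application code depends on."""

    def complete(self, prompt: str, temperature: float = 0.0) -> str: ...


class StubProviderA:
    """Placeholder adapter; a real one would call a provider SDK inside `complete`."""

    def complete(self, prompt: str, temperature: float = 0.0) -> str:
        return f"[A] {prompt}"


class StubProviderB:
    """Second placeholder adapter with the same method signature."""

    def complete(self, prompt: str, temperature: float = 0.0) -> str:
        return f"[B] {prompt}"


# Choosing a provider becomes a configuration lookup, not a code change.
PROVIDERS: dict[str, ChatModel] = {
    "provider-a": StubProviderA(),
    "provider-b": StubProviderB(),
}


def answer(question: str, model: ChatModel) -> str:
    # Application logic sees only the ChatModel interface.
    return model.complete(f"Answer concisely: {question}")


config = {"model": "provider-b"}
print(answer("What is LLMOps?", PROVIDERS[config["model"]]))
# [B] Answer concisely: What is LLMOps?
```

Because `Protocol` uses structural typing, adapters do not need to inherit from `ChatModel`; any class with a matching `complete` method satisfies the interface for static type checkers.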

Can non-technical team members use Agenta effectively?

Absolutely. A core design principle of Agenta is to bridge the gap between technical developers and non-technical stakeholders. The platform provides a user-friendly web UI that enables product managers, domain experts, and other collaborators to participate in the LLM development process. They can experiment with prompts in the playground, configure and view evaluation results, and annotate production traces without needing to write or understand code.

What is the difference between offline and online (live) evaluations in Agenta?

Offline evaluations are run on static datasets or test sets to validate changes before deployment. Online evaluations, or live evaluations, are performed on real-time production traffic to continuously monitor application health and performance. Agenta supports both. Online evaluations can detect regressions as they happen by applying scoring functions to live requests, providing an early warning system for issues that may not have been caught during offline testing.
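To illustrate how an online evaluation can act as an early warning system, the sketch below scores a sample of live request/response pairs and fires an alert when the rolling mean score drops below a threshold. `OnlineMonitor`, its parameters, and the toy `non_empty` scorer are hypothetical and are not Agenta's online-evaluation configuration.

```python
import random
from collections import deque
from typing import Callable, Optional


class OnlineMonitor:
    """Hypothetical online evaluator: scores sampled live traffic and alerts
    when the rolling mean over a full window falls below a threshold."""

    def __init__(self, scorer: Callable[[str, str], float], window: int = 50,
                 threshold: float = 0.8, sample_rate: float = 1.0,
                 seed: Optional[int] = None):
        self.scorer = scorer
        self.scores: deque[float] = deque(maxlen=window)
        self.threshold = threshold
        self.sample_rate = sample_rate
        self.rng = random.Random(seed)

    def observe(self, request: str, response: str) -> bool:
        """Record one live request; return True if an alert should fire."""
        if self.rng.random() >= self.sample_rate:
            return False  # not sampled; skip scoring to limit evaluation cost
        self.scores.append(self.scorer(request, response))
        full = len(self.scores) == self.scores.maxlen
        return full and sum(self.scores) / len(self.scores) < self.threshold


def non_empty(request: str, response: str) -> float:
    # Toy scoring function: penalize empty or refusal-style responses.
    return 0.0 if not response.strip() or response.startswith("I cannot") else 1.0


monitor = OnlineMonitor(non_empty, window=4, threshold=0.75, seed=0)
traffic = ["ok", "ok", "I cannot help", "", "ok"]
alerts = [monitor.observe("q", r) for r in traffic]
print(alerts)  # [False, False, False, True, True]
```

Waiting for a full window before alerting avoids firing on the first few noisy scores, and the sample rate trades detection speed against the cost of running scorers (for example, LLM judges) on every request.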

You may also like:

Blueberry

Blueberry is a Mac app that combines your editor, terminal, and browser in one workspace. Connect Claude, Codex, or any model and it sees everything.

Anti Tempmail

Transparent email intelligence verification API for Product, Growth, and Risk teams

My Deepseek API

Affordable, Reliable, Flexible - Deepseek API for All Your Needs